Deepchecks – AI-Powered Testing & Validation Tool

Introduction to Deepchecks

Deepchecks is a tool that automates the testing, validation, and monitoring of machine learning models. It helps ensure that models perform reliably in real-world environments by detecting errors, drift, and inconsistencies throughout development and after deployment.

How Deepchecks Works

Deepchecks integrates directly into machine learning workflows. It runs a comprehensive set of checks on datasets and models to uncover common issues like data leakage, distribution drift, or model bias. With modular testing suites, it adapts to various ML use cases.

- Data Integrity Checks: Identifies anomalies, duplicates, and missing values in datasets.

- Model Performance Monitoring: Tracks accuracy, precision, and recall over time.

- Bias Detection: Highlights fairness issues across demographic groups.

- Drift Detection: Flags changes in data distribution between training and production sets.
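To make two of the checks above concrete, here is a minimal sketch in plain Python. It is not the Deepchecks API: the function names `integrity_report` and `psi`, the sample data, and the 0.2 drift cutoff are all assumptions chosen for illustration. The integrity check counts exact duplicate rows and missing values, and the drift check uses the Population Stability Index (PSI) as one possible drift measure.

```python
import math
from collections import Counter


def integrity_report(rows):
    """Count exact duplicate rows and missing (None) values per column."""
    # Rows are dicts; sort items by column name so key order does not matter.
    seen = Counter(tuple(sorted(row.items())) for row in rows)
    duplicates = sum(count - 1 for count in seen.values())
    missing = Counter(col for row in rows for col, val in row.items() if val is None)
    return {"duplicates": duplicates, "missing": dict(missing)}


def psi(train, production, bins=10, eps=1e-6):
    """Population Stability Index between two numeric samples."""
    lo, hi = min(train), max(train)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant columns

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor each proportion at eps so the logarithm below is defined.
        return [max(c / len(values), eps) for c in counts]

    p_train, p_prod = proportions(train), proportions(production)
    return sum((q - p) * math.log(q / p) for p, q in zip(p_train, p_prod))


# Hypothetical data for illustration only.
rows = [
    {"age": 31, "income": 52000},
    {"age": 31, "income": 52000},  # exact duplicate
    {"age": 45, "income": None},   # missing value
]
report = integrity_report(rows)

train_ages = [float(v) for v in range(100)]
prod_ages = [v + 50.0 for v in train_ages]  # shifted production sample
DRIFT_THRESHOLD = 0.2  # assumed cutoff for this sketch
drifted = psi(train_ages, prod_ages) > DRIFT_THRESHOLD
```

A PSI of zero means the two samples fall into the bins in identical proportions. The value grows as the production distribution moves away from the training distribution, which is why a threshold on it can serve as a drift flag.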

Deepchecks is built for data scientists, ML engineers, and businesses seeking to build trustworthy AI systems. Its automation and customization features make it a key part of robust ML pipelines.

- Reliable Model Validation: Pinpoints problems before deployment.

- Customizable Testing Suites: Offers flexibility to match project-specific needs.

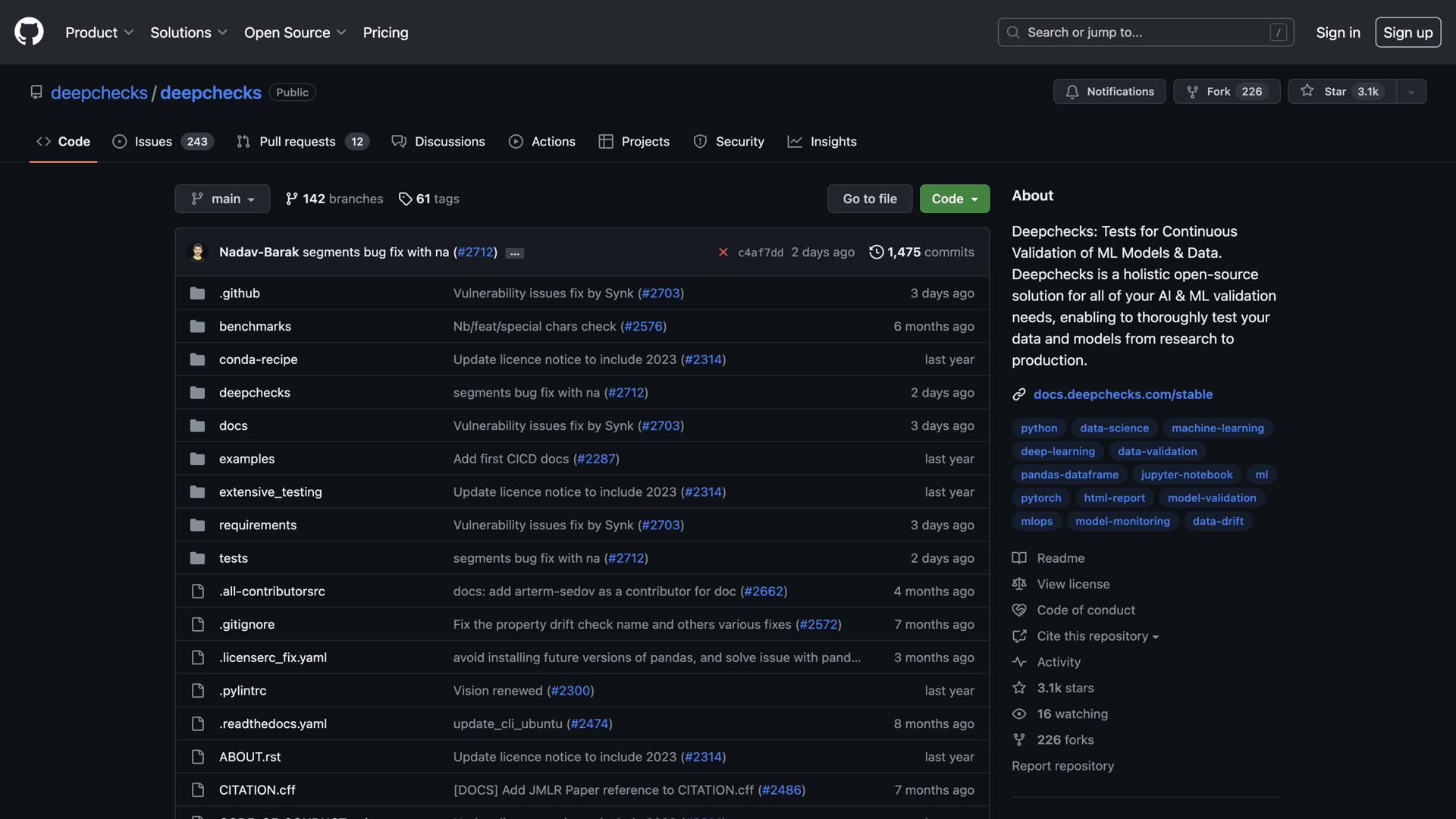

- Open-Source Friendly: Supports integration with popular ML frameworks.

- Continuous Monitoring: Keeps track of model health in production.

Deepchecks provides a range of features that make model testing and validation easier, faster, and more accurate.

- Out-of-the-Box Test Suites: Includes predefined checks for classification and regression models.

- Explainable Outputs: Delivers clear visualizations and summaries of test results.

- Pipeline Integration: Easily connects with CI/CD systems and Jupyter Notebooks.

- Scalability: Works efficiently with small or large-scale datasets.
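The idea of a test suite that plugs into a CI/CD pipeline can be sketched as a list of checks, each pairing a metric with a pass/fail condition, plus an exit code that a pipeline step could act on. This is an illustrative sketch in plain Python under stated assumptions, not the Deepchecks API: `Check`, `run_suite`, `exit_code`, the sample labels, and the thresholds are all hypothetical. The second check also shows one simple way to express a fairness test, as the recall gap between two groups.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class CheckResult:
    name: str
    value: float
    passed: bool


@dataclass
class Check:
    name: str
    compute: Callable[[Dict], float]    # metric computed from a context dict
    condition: Callable[[float], bool]  # pass/fail rule applied to the metric

    def run(self, context: Dict) -> CheckResult:
        value = self.compute(context)
        return CheckResult(self.name, value, self.condition(value))


def recall(y_true: List[int], y_pred: List[int]) -> float:
    """Fraction of actual positives that were predicted positive."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp / (tp + fn) if tp + fn else 0.0


def recall_gap(context: Dict) -> float:
    """Largest difference in recall between any two groups."""
    scores = []
    for g in set(context["group"]):
        idx = [i for i, v in enumerate(context["group"]) if v == g]
        scores.append(recall([context["y_true"][i] for i in idx],
                             [context["y_pred"][i] for i in idx]))
    return max(scores) - min(scores)


def run_suite(checks: List[Check], context: Dict) -> List[CheckResult]:
    return [check.run(context) for check in checks]


def exit_code(results: List[CheckResult]) -> int:
    """0 if every check passed, 1 otherwise, so a CI step can fail the build."""
    return 0 if all(r.passed for r in results) else 1


# Hypothetical suite; the thresholds are assumptions for this sketch.
suite = [
    Check("overall_recall",
          lambda c: recall(c["y_true"], c["y_pred"]),
          lambda v: v >= 0.5),
    Check("recall_gap_between_groups", recall_gap, lambda v: v <= 0.1),
]

context = {
    "y_true": [1, 1, 0, 1, 1, 0, 1, 0],
    "y_pred": [1, 1, 0, 0, 1, 0, 0, 0],
    "group":  ["a", "a", "a", "a", "b", "b", "b", "b"],
}
results = run_suite(suite, context)
for r in results:
    print(f"{r.name}: value={r.value:.3f} passed={r.passed}")
```

In a pipeline, a script like this would typically end with `sys.exit(exit_code(results))`, so that a failed condition blocks the deployment step. Keeping each check as a separate object is what makes the suite customizable: checks can be added, removed, or given different thresholds per project.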

Deepchecks is suited to professionals across the machine learning lifecycle. It supports a wide range of applications, from academic research to enterprise ML systems.

- Data Scientists: Ensures clean data and reliable models.

- ML Engineers: Integrates checks into automated pipelines.

- QA Analysts: Adds a new layer of testing for AI models.

- Tech Teams: Maintains consistent model performance over time.

Deepchecks strengthens the AI development process by adding layers of transparency and quality assurance. It helps teams detect hidden issues, optimize performance, and build trust in their machine learning models, whether they're in development or already deployed.

Conclusion

Deepchecks brings confidence and reliability to machine learning workflows. With automated tests, real-time monitoring, and bias detection, it empowers teams to create and maintain high-quality AI models with ease.