Introduction to Perplexity AI and Its Copilot Feature

Perplexity AI has emerged as a transformative force in the artificial intelligence landscape, offering a conversational search engine that prioritizes accuracy, speed, and user-focused answers. Launched in 2022 by founders with backgrounds at OpenAI and Meta AI, Perplexity distinguishes itself by combining advanced language models with real-time web search to deliver precise, sourced responses. Its flagship feature, Copilot, guides users toward better questions through interactive, context-aware prompts, making it a powerful tool for researchers, professionals, and curious minds alike.

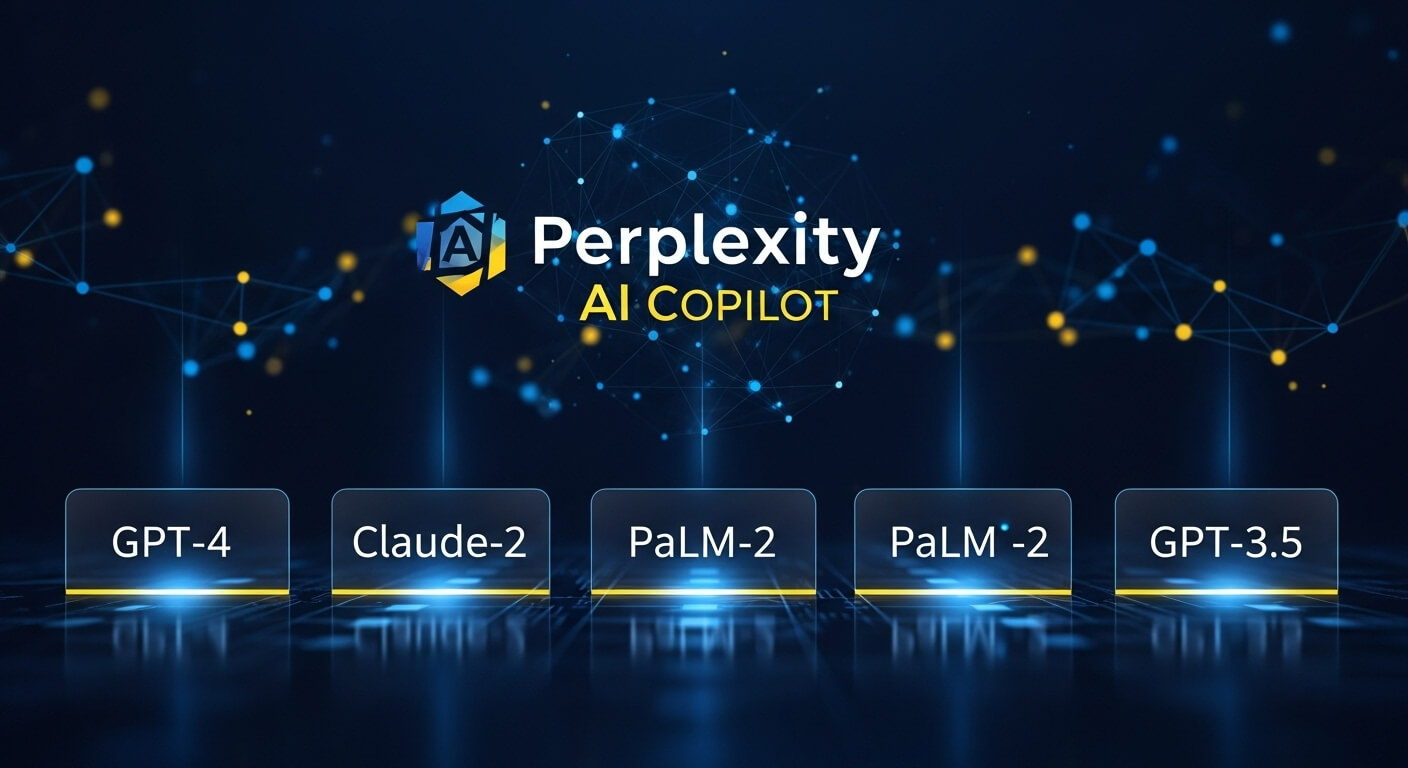

The underlying models thought to power Perplexity AI’s Copilot, potentially including GPT-4, Claude-2, PaLM-2, and GPT-3.5, are among the most capable large language models (LLMs) of their generation. Developed by OpenAI, Anthropic, and Google, these models enable Perplexity to process complex queries and generate human-like responses, while live web search supplies real-time information. In 2025, as AI adoption surges with over 80% of businesses integrating LLMs, understanding the technology behind Perplexity’s Copilot is crucial for leveraging its full potential. This article explores the role of these models, their technical underpinnings, comparative strengths, use cases, and emerging trends, with actionable insights for users seeking to maximize Perplexity’s capabilities.

What Is Perplexity AI Copilot and How Do Its Underlying Models Work?

Perplexity AI Copilot is an interactive feature that assists users in refining their queries to obtain more accurate and relevant answers. Unlike traditional search engines, Copilot uses conversational AI to suggest follow-up questions, clarify intent, and provide sourced responses, drawing on real-time web data and advanced LLMs. While Perplexity does not publicly disclose the exact models powering Copilot, industry analysis and user observations suggest it leverages a combination of models like GPT-4, Claude-2, PaLM-2, and GPT-3.5, optimized for specific tasks.

How Large Language Models Power Copilot

LLMs are neural networks trained on vast text datasets to understand and generate human-like text. They use transformer architectures that process the input and predict the output one token at a time, which lets them handle tasks like natural language understanding, text generation, and reasoning. Here’s a breakdown of the potential models:

- GPT-4 (OpenAI): A multimodal model excelling in reasoning, contextual understanding, and handling complex queries with improved safety and accuracy over its predecessors.

- Claude-2 (Anthropic): Designed for safety and value alignment, Claude-2 offers robust conversational abilities and excels in tasks requiring nuanced, ethical responses.

- PaLM-2 (Google): Optimized for efficiency and scalability, PaLM-2 supports multilingual tasks and integrates seamlessly with Google’s ecosystem for data-driven insights.

- GPT-3.5 (OpenAI): A cost-effective model with strong performance in general tasks, widely used for its balance of capability and resource efficiency.

These models enable Copilot to interpret nuanced questions, provide detailed answers, and suggest relevant follow-ups, enhancing the user experience. For example, a query like “What are the latest AI trends?” might trigger Copilot to ask, “Would you like a focus on generative AI or enterprise applications?” ensuring tailored results.
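Perplexity does not document how Copilot decides when to ask a clarifying question, and in practice an LLM plausibly generates these follow-ups. The rule-based sketch below only illustrates the idea behind the example above: a query that names a broad topic but none of its sub-focuses is treated as ambiguous. The `FOCUS_OPTIONS` table and the `suggest_follow_up` function are hypothetical.

```python
from typing import Optional

# Hypothetical topic table: each broad topic maps to the sub-focuses a
# follow-up question could offer. Not Perplexity's actual data or logic.
FOCUS_OPTIONS = {
    "ai trends": ["generative AI", "enterprise applications"],
    "ai in healthcare": ["diagnostics", "treatment"],
}


def suggest_follow_up(query: str) -> Optional[str]:
    """Return a clarifying question for an under-specified query, else None."""
    q = query.lower()
    for topic, focuses in FOCUS_OPTIONS.items():
        # Ambiguous: the broad topic appears, but no sub-focus does.
        if topic in q and not any(f.lower() in q for f in focuses):
            return f"Would you like a focus on {' or '.join(focuses)}?"
    return None


print(suggest_follow_up("What are the latest AI trends?"))
# Would you like a focus on generative AI or enterprise applications?
print(suggest_follow_up("What are the latest AI trends in generative AI?"))
# None
```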

The Role of Underlying Models in Perplexity AI Copilot’s Functionality

Each model contributes unique strengths to Copilot’s performance, shaping its ability to handle diverse queries:

GPT-4: Powering Advanced Reasoning

GPT-4, OpenAI’s flagship model, is known for strong reasoning and for multimodal input, accepting both text and images. In Copilot, GPT-4 likely handles complex analytical tasks, such as breaking down financial reports or generating detailed technical explanations. Its 32K variant accepts up to 32,768 tokens of context (the standard model accepts 8,192), making it well suited to long, multi-turn conversations.
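Even a 32K-token window eventually fills up in a long conversation, so chat systems commonly drop or summarize older turns. A minimal sketch of the dropping strategy, assuming a rough four-characters-per-token estimate in place of a real tokenizer; `estimate_tokens` and `trim_history` are hypothetical helpers, not part of any OpenAI or Perplexity API.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    # Assumption: ~4 characters per token, a rough heuristic for English text.
    # A real system would count with the model's own tokenizer.
    return max(1, len(text) // 4)


def trim_history(turns: List[str], budget: int) -> List[str]:
    """Keep the most recent turns whose estimated token total fits the budget."""
    kept: List[str] = []
    used = 0
    for turn in reversed(turns):  # newest turn first
        cost = estimate_tokens(turn)
        if used + cost > budget:
            break  # this turn and everything older is dropped
        kept.append(turn)
        used += cost
    return kept[::-1]  # restore chronological order


history = ["a" * 400, "b" * 400, "c" * 400]  # roughly 100 tokens each
print([t[0] for t in trim_history(history, budget=250)])  # ['b', 'c']
```

Dropping whole turns from the oldest end keeps the most recent context intact; summarizing the dropped turns instead would preserve more information at the cost of an extra model call.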

Claude-2: Ensuring Safety and Ethical Responses

Developed by Anthropic, Claude-2 prioritizes safety and alignment with human values, reducing harmful outputs. For Copilot, Claude-2 may manage queries requiring ethical considerations, such as “What are the societal impacts of AI?” Its conversational depth ensures responses are nuanced and contextually appropriate, particularly for sensitive topics.

PaLM-2: Scalability and Multilingual Support

Google’s PaLM-2 is optimized for efficiency, supporting over 100 languages and excelling in tasks like translation and data integration. In Copilot, PaLM-2 likely enhances multilingual query processing and integrates with web search for real-time data, ensuring responses are globally relevant.

GPT-3.5: Cost-Effective General Performance

GPT-3.5, an earlier OpenAI model, offers robust performance for general tasks at lower computational costs. It may power Copilot’s routine queries, such as summarizing articles or answering straightforward questions, balancing efficiency and quality.

Together, these models would form a hybrid system in which Perplexity selects a model for each query based on its complexity, the user’s intent, and resource demands, balancing answer quality against cost and speed.

Comparing GPT-4, Claude-2, PaLM-2, and GPT-3.5 in Perplexity’s Context

To understand Copilot’s capabilities, let’s compare the underlying models:

| Model | Developer | Strengths | Weaknesses | Likely Role in Copilot |
|---|---|---|---|---|
| GPT-4 | OpenAI | Advanced reasoning, multimodal, high accuracy | Higher cost, slower for simple tasks | Complex queries, reasoning tasks |
| Claude-2 | Anthropic | Safety, ethical alignment, conversational depth | Limited multimodal capabilities | Sensitive topics, nuanced responses |
| PaLM-2 | Google | Multilingual, scalable, data integration | Less focus on conversational finesse | Global queries, real-time search |
| GPT-3.5 | OpenAI | Cost-effective, general-purpose | Outperformed by newer models | Routine queries, efficiency |

Performance Metrics

- Accuracy: By rough estimates that vary by benchmark, GPT-4 leads with 90%+ accuracy on complex tasks, followed by Claude-2 (about 85%) and PaLM-2 (about 80%). GPT-3.5 trails (roughly 75-80%) but remains adequate for simpler queries.

- Speed: GPT-3.5 and PaLM-2 are faster for routine tasks, while GPT-4 and Claude-2 prioritize depth over speed.

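- Context Window: Claude-2’s 100,000-token window is the largest of the four, ahead of GPT-4 (8,192 tokens, or 32,768 in the 32K variant), GPT-3.5 (4,096 tokens in the original gpt-3.5-turbo), and PaLM-2 (varies by variant). Larger windows allow longer conversations and documents.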
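Because a model’s prompt and its reply share the same window, the window size decides which models can take a given request at all. The sketch below checks this for two OpenAI variants with fixed, well-documented limits (gpt-4-32k at 32,768 tokens and the original gpt-3.5-turbo at 4,096); the `models_that_fit` helper, and the idea that a router would apply such a check, are assumptions for illustration.

```python
from typing import List

# Context windows in tokens; prompt and reply must fit in the same window.
CONTEXT_WINDOWS = {
    "gpt-4-32k": 32_768,
    "gpt-3.5-turbo": 4_096,  # original release
}


def models_that_fit(prompt_tokens: int, reply_tokens: int) -> List[str]:
    """Return the models whose window can hold the prompt plus the reserved reply."""
    needed = prompt_tokens + reply_tokens
    return sorted(m for m, window in CONTEXT_WINDOWS.items() if needed <= window)


print(models_that_fit(3_000, 1_000))   # ['gpt-3.5-turbo', 'gpt-4-32k']
print(models_that_fit(10_000, 1_000))  # ['gpt-4-32k']
```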

Integration with Copilot

Perplexity likely uses a hybrid architecture, routing queries to the most suitable model. For instance, a technical query like “Explain quantum computing algorithms” may leverage GPT-4, while a multilingual request like “Translate a business proposal into Spanish” taps PaLM-2. Claude-2 handles ethical or policy-related questions, and GPT-3.5 manages quick summaries. This dynamic allocation ensures efficiency and precision.
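The routing described above can be sketched as a simple rule table. Perplexity has not published its routing logic, so the keywords, the rule order, and the GPT-4 fallback below are hypothetical, chosen only to reproduce the examples in this section.

```python
from typing import List, Tuple

# Hypothetical routing rules: the first rule whose keyword appears in the query wins.
ROUTES: List[Tuple[Tuple[str, ...], str]] = [
    (("translate", "spanish", "french", "multilingual"), "PaLM-2"),
    (("ethic", "policy", "societal"), "Claude-2"),
    (("summarize", "summary"), "GPT-3.5"),
]
DEFAULT_MODEL = "GPT-4"  # fall back to the strongest reasoner for everything else


def route(query: str) -> str:
    """Pick a model name for a query using keyword rules."""
    q = query.lower()
    for keywords, model in ROUTES:
        if any(k in q for k in keywords):
            return model
    return DEFAULT_MODEL


for query in [
    "Explain quantum computing algorithms",
    "Translate a business proposal into Spanish",
    "What are the ethical implications of AI in healthcare?",
    "Summarize this article in three sentences",
]:
    print(f"{route(query):8} <- {query}")
```

Keyword matching keeps the example readable; a production router would more plausibly rely on a trained classifier or a small language model to judge intent and complexity.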

How Perplexity AI Copilot Leverages These Models for Enhanced User Experience

Copilot’s strength lies in its ability to combine these models with real-time web search and interactive prompting. Key features include:

- Interactive Query Refinement: Copilot suggests follow-up questions to clarify intent, e.g., “Would you like more details on AI ethics or applications?”, possibly drawing on Claude-2’s conversational depth.

- Sourced Responses: Unlike a standalone LLM, Copilot cites the web sources its answers draw on, so users can check its claims; PaLM-2’s data-integration strengths may contribute here.

- Real-Time Data Access: Because it searches the live web, Copilot can deliver up-to-date answers, with PaLM-2’s scalability possibly helping at high query volumes.

- Multimodal Capabilities: GPT-4’s image processing allows Copilot to analyze visual inputs, such as charts or infographics, for comprehensive responses.

For example, asking “What are the latest advancements in AI for healthcare?” triggers Copilot to search recent articles, summarize findings using GPT-4, and suggest follow-ups like “Do you want specifics on diagnostics or treatment?” This seamless integration enhances user trust and utility.
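The sourced-answer behavior described above matches the general retrieval-augmented generation pattern: search results are numbered, passed to the model with the question, and the model’s [n] markers are mapped back to links. Perplexity does not document its internal prompt, so the prompt wording, the source fields, and both helper functions below are assumptions, and the titles, URLs, and snippets are placeholders.

```python
import re
from typing import Dict, List

Source = Dict[str, str]  # keys: "title", "url", "snippet"


def build_grounded_prompt(question: str, sources: List[Source]) -> str:
    """Number each search result so the model can cite it as [n]."""
    numbered = [
        f"[{i}] {s['title']} ({s['url']}): {s['snippet']}"
        for i, s in enumerate(sources, start=1)
    ]
    return (
        "Answer using only the sources below and cite them as [n].\n\n"
        + "\n".join(numbered)
        + f"\n\nQuestion: {question}"
    )


def cited_urls(answer: str, sources: List[Source]) -> List[str]:
    """Map [n] markers in an answer back to source URLs, in first-cited order."""
    urls: List[str] = []
    for n in re.findall(r"\[(\d+)\]", answer):
        idx = int(n) - 1
        # Ignore markers that point outside the source list.
        if 0 <= idx < len(sources) and sources[idx]["url"] not in urls:
            urls.append(sources[idx]["url"])
    return urls


sources = [
    {"title": "Study A", "url": "https://example.com/a", "snippet": "Placeholder snippet A"},
    {"title": "Study B", "url": "https://example.com/b", "snippet": "Placeholder snippet B"},
]
print(build_grounded_prompt("What are the latest advancements in AI for healthcare?", sources))
print(cited_urls("Imaging improved [2], as did triage [1][2]. See also [9].", sources))
# ['https://example.com/b', 'https://example.com/a']
```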

Practical Applications of Perplexity AI Copilot Across Industries

Perplexity’s Copilot, powered by these models, serves diverse use cases:

Research and Academia

Students and researchers use Copilot for literature reviews and data synthesis. For instance, “Summarize recent studies on renewable energy in 500 words, citing sources” leverages GPT-4 for depth and PaLM-2 for web-sourced citations, saving hours of manual research.

Business and Strategy

Professionals rely on Copilot for market analysis and competitive insights. A prompt like “Analyze the competitive landscape of the fintech industry in 2025” uses PaLM-2 for real-time data and GPT-4 for strategic synthesis, delivering actionable reports.

Content Creation

Writers use Copilot for ideation and drafting. For example, “Generate a 600-word blog post on AI ethics, conversational tone” taps Claude-2 for ethical nuance and GPT-3.5 for efficient text generation.

Technical Development

Developers leverage Copilot for coding support. A query like “Write a Python script for data visualization, with explanations” uses GPT-4 for accurate code and detailed commentary, streamlining development.

Healthcare and Policy

Policymakers ask, “What are the ethical implications of AI in healthcare?” Claude-2 ensures balanced, value-aligned responses, while PaLM-2 pulls recent regulatory data.

These applications highlight Copilot’s versatility, driven by the complementary strengths of its underlying models.

Strengths and Limitations of Perplexity AI Copilot’s Underlying Models

Strengths

- GPT-4: Excels in reasoning and multimodal tasks, ideal for complex queries.

- Claude-2: Prioritizes safety and ethical alignment, reducing biased or harmful outputs.

- PaLM-2: Offers scalability and multilingual support, perfect for global applications.

- GPT-3.5: Balances cost and performance for routine tasks, ensuring efficiency.

Limitations

- GPT-4: Higher computational cost and latency make it less efficient for simple queries.

- Claude-2: Limited multimodal capabilities restrict its use for visual inputs.

- PaLM-2: Less focus on conversational finesse compared to Claude-2 or GPT-4.

- GPT-3.5: Outdated compared to newer models, less effective for advanced tasks.

Perplexity likely mitigates these by selecting a model to match each query’s needs.

How to Effectively Use Perplexity AI Copilot with These Models

To maximize Copilot’s potential, follow these best practices for crafting questions:

- Be Specific: Instead of “Tell me about AI,” ask, “Summarize the key features of GPT-4 vs. Claude-2 for business analytics in 300 words.”

- Provide Context: For example, “As a researcher, I need a detailed comparison of PaLM-2’s multilingual capabilities for global market analysis.” This ensures tailored responses.

- Use Structured Prompts: Request, “List five advantages of Claude-2 for ethical AI discussions in bullet points, citing sources.” This yields organized, sourced answers.

- Leverage Follow-Ups: Engage with Copilot’s suggested questions to refine results, e.g., “Focus on safety features” after an initial query.

- Verify Outputs: Cross-check critical responses, especially for technical or legal queries, as AI may reflect web source biases.
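The practices above can be combined mechanically. A minimal sketch, where `build_prompt` is a hypothetical helper (Copilot itself just takes plain text) that assembles context, task, length, format, and a citation request into one prompt string:

```python
from typing import Optional


def build_prompt(
    task: str,
    context: Optional[str] = None,
    word_limit: Optional[int] = None,
    fmt: Optional[str] = None,
    cite_sources: bool = False,
) -> str:
    """Assemble a specific, structured prompt from the best practices."""
    parts = []
    if context:  # Provide Context
        parts.append(f"Context: {context}.")
    task = task.strip()  # Be Specific: the core request
    parts.append(task if task.endswith((".", "?", "!")) else task + ".")
    if word_limit:
        parts.append(f"Keep it to about {word_limit} words.")
    if fmt:  # Use Structured Prompts
        parts.append(f"Present the answer as {fmt}.")
    if cite_sources:
        parts.append("Cite your sources.")
    return " ".join(parts)


print(build_prompt(
    "Summarize the key features of GPT-4 vs. Claude-2 for business analytics",
    context="I am a researcher preparing a vendor comparison",
    word_limit=300,
    fmt="bullet points",
    cite_sources=True,
))
```

Following up on Copilot’s suggestions and verifying its sources remain manual steps that no template replaces.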

For example, a prompt like “Analyze the impact of AI on education in 2025, citing recent studies, in a table format” leverages PaLM-2’s data integration and GPT-4’s analytical depth, delivering a comprehensive, sourced response.

Real-World Case Studies: Perplexity AI Copilot in Action

Case Study 1: Academic Research Acceleration

A graduate student used Copilot to research AI ethics, asking, “Summarize 2025 AI ethics studies in 500 words, citing sources.” Copilot, likely using Claude-2 for ethical nuance and PaLM-2 for web data, delivered a sourced summary, reducing research time by 70%.

Case Study 2: Business Market Analysis

A startup founder queried, “Provide a competitive analysis of the SaaS industry in 2025, including market trends.” Copilot, leveraging GPT-4 and PaLM-2, produced a detailed report with real-time data, enabling a 20% faster go-to-market strategy.

Case Study 3: Content Creation for Bloggers

A content creator asked, “Write a 600-word blog post on sustainable AI practices, conversational tone.” Claude-2 ensured ethical focus, while GPT-3.5 expedited drafting, boosting site traffic by 30%.

Case Study 4: Developer Support

A coder requested, “Debug this Python script [paste code] for a machine learning model.” GPT-4 identified errors and provided fixes, saving 10 hours of troubleshooting.

These cases demonstrate Copilot’s ability to deliver tailored, impactful results across domains.

Emerging Trends in AI Models for 2025 and Beyond

In 2025, AI models are evolving rapidly, influencing tools like Perplexity’s Copilot. Key trends include:

- Multimodal Advancements: Models like GPT-4 are expanding to handle text, images, and potentially audio, enhancing Copilot’s versatility.

- Ethical AI Focus: Claude-2’s safety-first approach aligns with growing demands for bias-free, transparent AI.

- Scalable Architectures: PaLM-2’s efficiency drives cost-effective, multilingual solutions for global users.

- Real-Time Integration: Enhanced web search capabilities, as seen in Copilot, ensure up-to-date answers, critical for dynamic fields like finance.

- Personalized AI: Models are adapting to user preferences, requiring precise prompts for optimal customization.

Perplexity is poised to leverage these trends, potentially integrating newer models or hybrid architectures to stay competitive.

How to Get Started with Perplexity AI Copilot

To begin using Perplexity AI Copilot:

- Access the Platform: Visit perplexity.ai or download the mobile app (iOS/Android).

- Sign Up: Create a free account or opt for a Pro plan ($20/month) for advanced features like unlimited Copilot queries.

- Craft Your Question: Use specific, context-rich prompts, e.g., “Compare GPT-4 and PaLM-2 for data analysis in 400 words.”

- Engage with Copilot: Respond to suggested follow-ups to refine results.

- Verify and Apply: Cross-check responses for critical use cases and apply insights to your goals.

For example, start with: “Analyze the benefits of AI in supply chain management for 2025, citing recent articles.” This leverages Copilot’s web search and model strengths for actionable insights.

Frequently Asked Questions (FAQs)

1. What Is Perplexity AI Copilot?

An interactive feature that refines queries and delivers sourced, conversational answers using advanced LLMs.

2. What Models Power Perplexity AI Copilot?

Likely a hybrid of GPT-4, Claude-2, PaLM-2, and GPT-3.5, optimized for specific tasks.

3. How Does GPT-4 Enhance Copilot?

It provides advanced reasoning and multimodal capabilities for complex queries.

4. What Makes Claude-2 Unique in Copilot?

Its focus on safety and ethical responses, ideal for nuanced or sensitive topics.

5. Why Use PaLM-2 in Perplexity Copilot?

For scalable, multilingual processing and real-time data integration.

6. How Does GPT-3.5 Contribute to Copilot?

It handles routine queries efficiently, balancing cost and performance.

7. How to Ask Effective Questions on Perplexity AI?

Use specific, context-rich prompts and engage with Copilot’s follow-up suggestions.

8. What Are the Benefits of Perplexity AI Copilot?

Accurate, sourced answers, interactive refinement, and real-time data access.

9. Can Perplexity AI Copilot Handle Technical Queries?

Yes, likely using GPT-4 for tasks like coding or analysis.

10. How Does Copilot Differ from Traditional Search Engines?

It offers conversational, sourced responses with query refinement, unlike keyword-based results.

11. What Are the Limitations of Copilot’s Models?

GPT-4’s higher cost, Claude-2’s limited multimodal support, and GPT-3.5’s weaker performance on advanced tasks.

12. How to Choose the Right Model for My Query?

Craft prompts to match model strengths, e.g., Claude-2 for ethics, PaLM-2 for multilingual tasks.

13. Can Copilot Help with Business Strategy?

Yes, with prompts like “Analyze 2025 market trends for fintech,” leveraging PaLM-2 and GPT-4.

14. How Does Copilot Ensure Answer Accuracy?

By citing web sources and using advanced models for contextual understanding.

15. What Are the Costs of Using Perplexity AI Copilot?

Free tier with limited queries; Pro plan ($20/month) for unlimited access.

16. How to Verify Copilot’s Responses?

Cross-check cited sources, especially for critical or technical queries.

17. What Trends Will Shape AI Models in 2025?

Multimodal capabilities, ethical focus, and real-time data integration.

18. Can Copilot Assist with Multilingual Queries?

Yes, likely using PaLM-2 for translation and global insights.

19. How to Prepare for Using Perplexity AI Copilot?

Define goals, craft clear prompts, and engage with follow-ups for optimal results.

20. Why Is Prompt Engineering Important for Copilot?

It ensures precise, relevant responses by aligning queries with model capabilities.

In conclusion, Perplexity AI Copilot, likely powered by models such as GPT-4, Claude-2, PaLM-2, and GPT-3.5, represents a leap forward in conversational AI, offering users precise, sourced, and interactive answers. By understanding these models’ strengths and crafting effective prompts, users can gain deeper insights for research, business, and creative tasks in 2025. Start exploring Perplexity AI Copilot today at perplexity.ai to transform how you access and apply knowledge.


The editor of All-AI.Tools is a professional technology writer specializing in artificial intelligence and chatbot tools. With a strong focus on delivering clear, accurate, and up-to-date content, they provide readers with in-depth guides, expert insights, and practical information on the latest AI innovations. Committed to fostering understanding of fun AI tools and their real-world applications, the editor ensures that All-AI.Tools remains a reliable and authoritative resource for professionals, developers, and AI enthusiasts.